#docker port forwarding existing container

Explore tagged Tumblr posts

Text

Docker Container Port Mapping Tutorial for beginners | Docker Port Expose and Port Forwarding

Full Video Link: https://youtu.be/2gie3gpDJUg Hi, a new #video on #dockerportmapping is published on @codeonedigest #youtube channel. Learn docker container port forwarding and docker expose. What is docker port mapping and -p option. Running docker cont

Docker container port mapping and port forwarding. Docker expose attribute in Docker file is used to forward container port to the host machine. Running docker container on custom port. Using docker expose, run the docker application on specific port. How to run docker image on specific port? What is port mapping in docker container? Why docker port mapping is not working? Why containerized…

View On WordPress

#docker#docker and Kubernetes#docker compose#docker compose port mapping#docker container port change#docker container port forwarding not working#docker container port mapping#docker container port mapping explained#docker container port not exposed#docker container ports explained#docker port forwarding#docker port forwarding doesn’t work#docker port forwarding existing container#docker port forwarding running container#docker port mapping#docker port mapping explained#docker port mapping not working#docker port mapping tutorial#docker port mapping vs expose#docker tutorial#docker tutorial for beginners#port mapping in running docker container#run docker container on custom port#update docker container portainer#what is docker

0 notes

Text

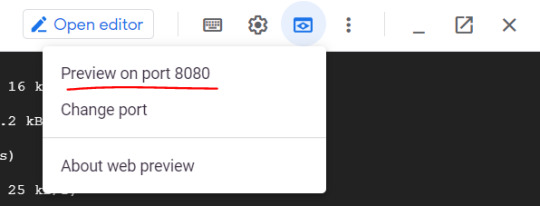

The reign of containerized applications is here and it is here indeed. Talking of Kubernetes, Docker, Podman, Openshift and the rest of important platforms that push for a containerized world, you will definitely need tools to make your work better. Whether it is visualizing your pods, your deployments, viewing your logs and resources, then you are in for a pleasurable treat. If you have a raw Kubernetes cluster and you feel the Kubernetes dashboard is not something you can work with, then this guide offers one alternative that you can look at and decide if it is something you can settle with. We proudly present VMware Octant. Before we jump into the pool, let us investigate what you can expect from its waters. VMware Octant is a tool for developers to understand how applications run on a Kubernetes cluster. It aims to be part of the developer’s toolkit for gaining insight and approaching complexity found in Kubernetes. Octant offers a combination of introspective tooling, cluster navigation, and object management along with a plugin system to further extend its capabilities. Impressive Features you will find. VMware Octant is a beauty peagent that gets brilliant cheers from its audience due to the following features: Visualization: VMware Octant provides a visual interface to managing Kubernetes that complements and extends existing tools like kubectl and kustomize. Extensibility: You can add information to your cluster views through Octant’s plug-in system. Versatility: VMware Octant supports a variety of debugging features such as filtering labels and streaming container logs to be part of the Kubernetes development toolkit. Port forward: Debug applications running on a cluster in a local environment by creating port forwards through an intuitive interface. Plug-ins over gRPC: With Octant’s plug-in API, core features can be extended to meet the needs of customized workflows on a cluster. Real-time updates: With a complete view of an object and all its related objects, you can more accurately assess the status of applications and avoid unfocused debugging when things go wrong Label Filter: Organize workloads with label filtering for inspecting clusters with a high volume of objects in a namespace. Log Stream: View log streams of pod and container activity for troubleshooting or monitoring without holding multiple terminals open. Can be installed on all platforms: That is Windows, Linux and macOS. Octant Plugins Plugins are a core part of Octant in the Kubernetes ecosystem. A plugin can read objects and allows users to add components to Octant’s views. With that said, it is the intention of this guide to get VMware Octant installed and explored as well as well get to see how some plugins can be added and utilized therein. Without further delays, I believe we are now comfortable and ready to plunge into the waters. How To Install VMware Octant To get VMware Octant installed, you can be in Windows, Linux or macOS systems and you will accrue the same benefits. The following are the steps involved in getting VMware Octant installed. Step 1: Update your server and install necessary applications Depending on your platform, simply update your Operating system to get the latest packages and patches as well as installing essential packages such as git ###For Ubuntu### sudo apt update && sudo apt upgrade sudo apt install vim git curl wget -y ###For CentOS### sudo yum update sudo yum install vim git curl wget -y Step 2: Install VMware Octant on Linux / macOS / Windows In this section you’ll be able to install VMware Octant on Linux, macOS and Windows. Install VMware Octant on Linux If you are on a distribution that supports .deb or .rpm, there are packages available for you. Depending on the architecture of your system simply visit the official releases page, download the packages matching your architecture and install them as follows: ##For DEB-based systems wget https://github.com/vmware-tanzu/octant/releases/download/v0.16.1/octant_0.16.1_Linux-64bit.deb

sudo dpkg -i octant_0.16.1_Linux-64bit.deb ##For RPM-based systems wget https://github.com/vmware-tanzu/octant/releases/download/v0.16.1/octant_0.16.1_Linux-64bit.rpm sudo rpm -ivh octant_0.16.1_Linux-64bit.rpm Installing VMware Octant on Windows If you are on Windows, you can get your Octant running as well. To make your work easier, VMWare Octant is available as packages that Chocolatey and Scoop can fetch and install. If you do not have Chocolatey installed, you can use this how to install Chocolatey guide to get it running quick. Install using Chocolatey choco install octant --confirm Install using Scoop ###Add the extras bucket. scoop bucket add extras ##Then Install Octant scoop install octant Installing VMware Octant on macOS For our macOS group of people, Homebrew always comes to the rescue and the following one-liner will make you happy: brew install octant Step 3: Getting Started with VMware Octant Octant is configurable through environment variables defined during runtime, here are some of the notable variables you will enjoy to use: i. Starting Octant with a given IP address and port Environment variables defined at runtime can for example be like the one shared below where we specify the IP address and port we would wish the application to listen from and be accessed by. The command below will cause Octant to be accessed from any IP and at port 8900. OCTANT_LISTENER_ADDR=0.0.0.0:8900 octant Running the above command on your terminal exposes Octant on the IP of your server on the port specified. If you have a firewall running, you should allow that port for access. Finally, point your browser to the ip and port (http::8900) and you should see an interface as shown below. If will first ask you for the contents of your cluster’s config file for it to connect to it. Paste the contents of your config file as illustrated below then hit “UPLOAD” button. Once connected, you will be ushered into your cluster in the default namespace You can view all of the namespaces that you have. And view the resources within each namespace Conveniently check out your Nodes: And much much more. Step 4: Adding Plugins to VMware Octant Installation Plugins are binaries that run alongside developer dashboard to provide additional functionality. Plugins are built using go-plugin in order to communicate with the dashboard over gRPC. They can read objects and allows users to add components to Octant’s views. Plugins can do the following: Add new tabs to the dashboard Include additional content to an existing summary section Create a new section in an existing tab Port forward to a running pod In order to add plugins, we will need to install Go. If you are on CentOS you can use: How To Install Go on CentOS 8 | CentOS 7 and those in Ubuntu can use Install Go (Golang) on Ubuntu. Once Go (Golang) is installed, run the following to install a sample plugin: $ cd ~ $ git clone https://github.com/vmware-tanzu/octant.git $ cd ~/octant/ $ go run build.go install-test-plugin 2020/10/23 17:19:45 Plugin path: /home/vagrant/.config/octant/plugins 2020/10/23 17:19:45 Running: /home/vagrant/.go/bin/go build -o /home/vagrant/.config/octant/plugins/octant-sample-plugin github.com/vmware-tanzu/octant/cmd/octant-sample-plugin Once the sample plugin has been installed, launch Octant once again and view the plugins section. You should see “Sample Plugin” as shown below. More details of the plugin can also be viewed as shared below. If you have any custom plugins, Octant can be extended. It is that flexible. Find more about VMWare Octant at its official webpage Culmination Desiring a tool that makes sure your work is lighter and better is all most developers are looking for to help them visualize and get all information they would need to see their applications scale and grow. It is without a doubt that VMWare Octant is a promise keeper and a vigorous performer.

It presents Kubernetes workloads and resources in a friendly and easy to follow fashion which will boost your work and take a load off your chest if you would wish to expand and diversify kubectl command. Get it installed and explore even more as you see if it is a tool you can keep and use.

0 notes

Text

Overview of GitOps

What is GitOps? Guide to GitOps — Continuous Delivery for Cloud Native applications

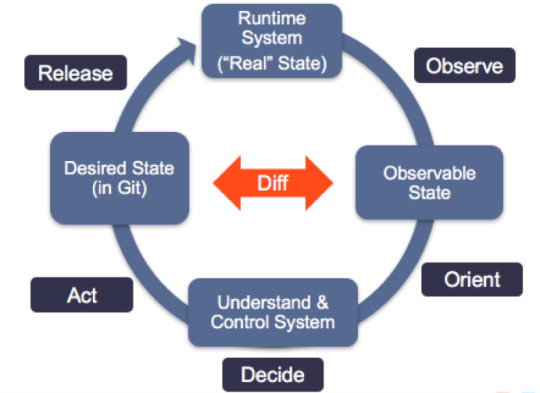

GitOps is a way to do Kubernetes cluster management and application delivery. It works by using Git as a single source of truth for declarative infrastructure and applications, together with tools ensuring the actual state of infrastructure and applications converges towards the desired state declared in Git. With Git at the center of your delivery pipelines, developers can make pull requests to accelerate and simplify application deployments and operations tasks to your infrastructure or container-orchestration system (e.g. Kubernetes).

The core idea of GitOps is having a Git repository that always contains declarative descriptions of the infrastructure currently desired in the production environment and an automated process to make the production environment match the described state in the repository. If you want to deploy a new application or update an existing one, you only need to update the repository — the automated process handles everything else. It’s like having cruise control for managing your applications in production.

Modern software development practices assume support for reviewing changes, tracking history, comparing versions, and rolling back bad updates; GitOps applies the same tooling and engineering perspective to managing the systems that deliver direct business value to users and customers.

Pull-based Deployments

more info @ https://gitops.tech

The Pull-based deployment strategy uses the same concepts as the push-based variant but differs in how the deployment pipeline works. Traditional CI/CD pipelines are triggered by an external event, for example when new code is pushed to an application repository. With the pull-based deployment approach, the operator is introduced. It takes over the role of the pipeline by continuously comparing the desired state in the environment repository with the actual state in the deployed infrastructure. Whenever differences are noticed, the operator updates the infrastructure to match the environment repository. Additionally the image registry can be monitored to find new versions of images to deploy.

Just like the push-based deployment, this variant updates the environment whenever the environment repository changes. However, with the operator, changes can also be noticed in the other direction. Whenever the deployed infrastructure changes in any way not described in the environment repository, these changes are reverted. This ensures that all changes are made traceable in the Git log, by making all direct changes to the cluster impossible.

In Kubernetes eco-system we have overwhelming numbers of tools to achieve GitOps. let me share some of the tools as below,

Tools

ArgoCD: A GitOps operator for Kubernetes with a web interface

Flux: The GitOps Kubernetes operator by the creators of GitOps — Weaveworks

Gitkube: A tool for building and deploying docker images on Kubernetes using git push

JenkinsX: Continuous Delivery on Kubernetes with built-in GitOps

Terragrunt: A wrapper for Terraform for keeping configurations DRY, and managing remote state

WKSctl: A tool for Kubernetes cluster configuration management based on GitOps principles

Helm Operator: An operator for using GitOps on K8s with Helm

Also check out Weavework’s Awesome-GitOps.

Benefits of GitOps

Faster development

Better Ops

Stronger security guarantees

Easier compliance and auditing

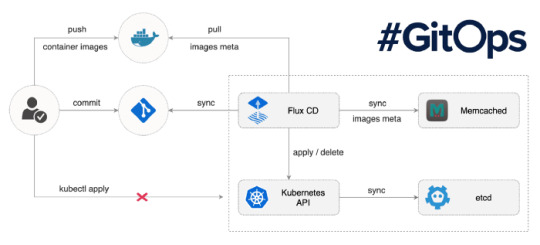

Demo time — We will be using Flux

Prerequisites: You must have running Kubernetes cluster.

Install “Fluxctl”. I have used Ubuntu 18.04 for demo.

sudo snap install fluxctl

2. Create new namespace called “flux”

kubectl create ns flux

3. Setup flux with your environmental repo. We are using repo “flux-get-started”.

export GHUSER="YOURUSER" fluxctl install \ --git-user=${GHUSER} \ --git-email=${GHUSER}@users.noreply.github.com \ [email protected]:${GHUSER}/flux-get-started \ --git-path=namespaces,workloads \ --namespace=flux | kubectl apply -f -

4. Set Deploy key in Github. You will need your public key.

fluxctl identity --k8s-fwd-ns flux

5. At this point you must have following pods, Services running on your cluster. (In “flux” and “demo” namespace)

namespace: flux

namespace: demo

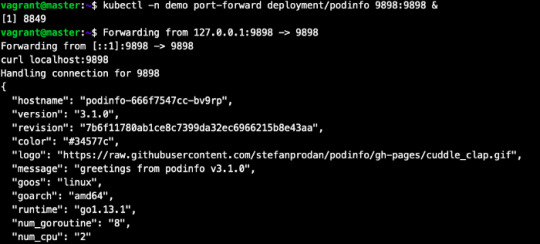

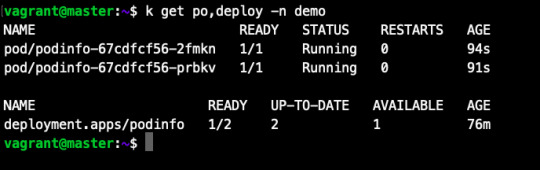

6. Let’s test what we have deployed.

kubectl -n demo port-forward deployment/podinfo 9898:9898 & curl localhost:9898

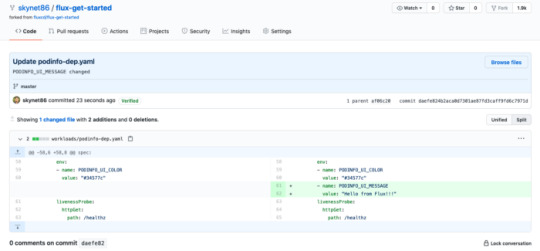

7. Now, lets make small change in repo and commit it to master branch.

By default, Flux git pull frequency is set to 5 minutes. You can tell Flux to sync the changes immediately with:

fluxctl sync --k8s-fwd-ns flux

Wow our changes from our repo has been successfully applied on cluster.

our changes from our repo has been successfully applied on cluster.

Let’s do one more test, assume that by mistake someone has reduced/deleted your pods on production cluster.

By default, Flux git pull frequency is set to 5 minutes. You can tell Flux to sync the changes immediately with:

fluxctl sync --k8s-fwd-ns flux

You have successfully restored your cluster in GitOps way. No Kubectl required!!

Whenever the deployed infrastructure changes in any way not described in the environment repository, these changes are reverted.

Thank You for reading.

Source:

#cloud native application#cloud app development#software development#mobile app development#kubernetes cluster#WeCode Inc#Japan

0 notes

Text

Xdebug Chrome

Xdebug is an extension for debugging your PHP. The following explains how to configure Xdebug and PhpStorm to debug in your local environment. You can use the IDE of your choice. See the vendor documentation for those applications for further configuration information.

Xdebug Helper

Xdebug Chrome Extension

Chrome Xdebug Not Working

Xdebug Chrome How To Use

You will surely like this chrome extension if you love debugging, profiling and tracing PHP code with Xdebug. It comes handy for PHP developers that loves using PHP tools with Xdebug support like PHPStorm, Eclipse with PDT, Netbeans and MacGDBp or any other Xdebug compatible profiling tool like KCacheGrind, WinCacheGrind or Webgrind. Enabling XDEBUG in Web Browsers Chrome. There are two really useful plugins for Chrome that you should install: XDEBUG Helper- This will enable you to turn XDEBUG on and off from your web browser without having to modify the URL. This is essential when debugging a POST type form submission where you can't just enter a URL.

You can configure Xdebug to run in the Magento Cloud Docker environment for local debugging without changing your Magento Commerce Cloud project configuration. See Configure Xdebug for Docker.

To set up Xdebug, you need to configure a file in your Git repository, configure your IDE, and set up port forwarding. You can configure settings in the magento.app.yaml file. After editing, you can push the Git changes across all Starter environments and Pro Integration environments to enable Xdebug. To push these settings to Pro plan Staging and Production environments, you must enter a ticket.

Once configured, you can debug CLI commands, web requests, and code. Remember, all Magento Commerce Cloud environments are read-only. You need to pull code to your local development environment to perform debugging. For Pro Staging and Production environments, we include additional instructions for Xdebug.

Requirements

To run and use Xdebug, you need the SSH URL for the environment. You can locate the information through the Project Web Interface or your Cloud Onboarding UI.

Configure Xdebug

To configure Xdebug, you need to do the following:

Work in a branch to push file updates

Configure your IDE, like PhpStorm

For configuring on Pro plan Staging and Production, you need to enter a ticket for Staging and Production.

Get started with a branch

To add Xdebug, we recommend creating a branch to work in and add the files.

To get started with environment branches:

On your local workstation, change to your Cloud project directory.

Switch to the Magento file system owner.

Log in to your Magento project.

List your projects.

List environments in the project. Every environment includes an active Git branch that contains your code, database, environment variables, configurations, and services.

It is important to use the magento-cloud environment:list command because it displays environment hierarchies, whereas the git branch command does not.

Fetch origin branches to get the latest code.

Checkout, or switch to, a specific branch and environment.

Git commands only checkout the Git branch. The magento-cloud checkout command checks out the branch and switches to the active environment.

You can create a new environment branch using the magento-cloud environment:branch <environment-name> <parent-environment-ID> command syntax. It may take some additional time to create and activate a new environment branch.

Use the environment ID to pull any updated code to your local. This is not necessary if the environment branch is new.

(Optional) Create a snapshot of the environment as a backup.

Enable Xdebug in your environment

To enable Xdebug for your project, add xdebug to the runtime:extensions section of the .magento.app.yaml file.

You can enable Xdebug directly to all Starter environments and Pro Integration environments. For Pro Staging and Production, you need to update this file and enter a Support ticket to have it enabled. We enable Xdebug on those environments for you.

To enable Xdebug:

In your local terminal, open the .magento.app.yaml file in a text editor.

In the runtime section, under extensions, add xdebug. For example:

Save your changes to the .magento.app.yaml file and exit the text editor.

Add, commit, and push the changes to redeploy the environment.

When deployed to Starter environments and Pro Integration environments, Xdebug is now available. You should continue configuring your IDE. For PhpStorm, see Configure PhpStorm.

Configure PhpStorm

You need to configure PhpStorm to properly work with Xdebug.

To configure PhpStorm to work with Xdebug:

In your PhpStorm project, open the settings panel.

Mac OS X—Select PhpStorm > Preferences.

Windows/Linux—Select File > Settings.

In the Settings panel, expand and locate the Languages & Frameworks > PHP > Servers section.

Click the + to add a server configuration. The project name is in grey at the top.

Configure the following settings for the new server configuration:

Name—enter the same as the hostname. This value is used in and must match the value for PHP_IDE_CONFIG variable in Debug CLI commands.

Host—Enter localhost.

Port—Enter 80.

Debugger—Select Xdebug.

Select Use path mappings. In the File/Directory pane, the root of the project for the serverName displays.

In the Absolute path on the server column, click (Edit) and add a setting based on the environment:

For all Starter environments and Pro Integration environments, the remote path is /app.

For Pro Staging and Production environments:

Production: /app/<project_code>/

Staging: /app/<project_code>_stg/

Change the Xdebug port to 9000 in the Languages & Frameworks > PHP > Debug > Xdebug > Debug Port panel.

Click Apply.

Set up port forwarding

You must map the XDEBUG connection from the server to your local system. To do any type of debugging, you must forward port 9000 from your Magento Commerce Cloud server to your local machine. See one of the following sections:

Port forwarding on Mac or UNIX

To set up port forwarding on a Mac or in a Unix environment:

Open a terminal.

Use SSH to establish the connection.

Add the -v option to the SSH command to show in the terminal whenever a socket is connected to the port that is being forwarded.

If an “unable to connect” or “could not listen to port on remote” error is displayed, there could be another active SSH session persisting on the server that is occupying port 9000. If that connection isn’t being used, you can terminate it.

To troubleshoot the connection:

Use SSH to log in to the remote Integration, Staging, or Production environment.

Enter who to view a list of SSH sessions.

View existing SSH sessions by user. Be careful to not affect a user other than yourself!

Integration: usernames are similar to dd2q5ct7mhgus

Staging: usernames are similar to dd2q5ct7mhgus_stg

Production: usernames are similar to dd2q5ct7mhgus

For a user session that is older than yours, find the pseudo-terminal (PTS) value, such as pts/0.

Kill the process ID (PID) corresponding to the PTS value.

Sample response:

To terminate the connection, enter a kill command with the process ID (PID).

Port forwarding on Windows

To set up port forwarding (SSH tunneling) on Windows, you must configure your Windows terminal application. For this example, we walk through creating an SSH tunnel using Putty. You can use other applications such as Cygwin. For more information on other applications, see the vendor documentation provided with those applications.

To set up an SSH tunnel on Windows using Putty:

If you have not already done so, download Putty.

Start Putty.

In the Category pane, click Session.

Enter the following information:

Hostname (or IP address) field: Enter the SSH URL for your Cloud server

Port field: Enter 22

In the Category pane, click Connection > SSH > Tunnels.

Enter the following information:

Source port field: Enter 9000

Destination field: Enter 127.0.0.1:9000

Click Remote

Click Add.

In the Category pane, click Session.

In the Saved Sessions field, enter a name for this SSH tunnel.

Click Save.

To test the SSH tunnel, click Load, then click Open.

If an “unable to connect” error displays, verify all of the following:

All Putty settings are correct

You are running Putty on the machine on which your private Magento Commerce Cloud SSH keys are located

Configure Pro Staging and Production

To complete configuration for Pro plan Staging and Production environments, you must enter a Support ticket to have Xdebug enabled and configured in Staging and Production environments.

We enable Xdebug in the environment. Be aware that this is a configuration change that requires us to redeploy your Staging and Production environments.

SSH access to Xdebug environments

For initiating debugging, performing setup, and more, you need the SSH commands for accessing the environments. You can get this information, through the Project Web Interface and your project spreadsheet.

Xdebug Helper

For Starter environments and Pro Integration environments, you can use the following Magento Cloud CLI command to SSH into those environments:

To use Xdebug, SSH to the environment as follows:

For example,

Debug for Pro Staging and Production

To use Xdebug specifically on Pro plan Staging and Production environment, you create a separate SSH tunnel and web session only you have access to. This usage differs from typical access, only providing access to you and not to all users.

You need the following:

SSH commands for accessing the environments. You can get this information, through the Project Web Interface or your Cloud Onboarding UI.

The xdebug_key value we set when configuring the Staging and Pro environments

To set up an SSH tunnel to a Staging or Production environment:

Open a terminal.

Clean up all SSH sessions.

Set up the SSH tunnel for Xdebug.

To start debugging using the environment URL:

To enable remote debugging, visit the site in the browser with the following added to the URL where KEY is value for xdebug_key:

This sets the cookie that sends browser requests to trigger Xdebug.

Complete your debugging with Xdebug.

When you are ready to end the session, you can use the following command to remove the cookie and end debugging through the browser where KEY is value for xdebug_key:

The XDEBUG_SESSION_START passed by POST requests are not supported at this time.

Debug CLI commands

This section walks through debugging CLI commands.

To debug CLI commands:

SSH into the server you want to debug using CLI commands.

Create the following environment variables:

These variables are removed when the SSH session ends.

Begin debugging

On Starter environments and Pro Integration environments, run the CLI command to debug.You may add runtime options, for example:

On Pro Staging and Production environments, you must specify the path to the Xdebug php configuration file when debugging CLI commands, for example:

For debugging web requests

The following steps help you debug web requests.

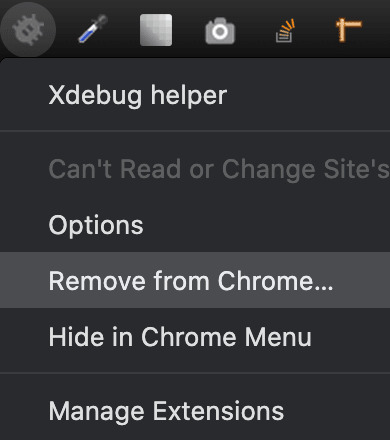

On the Extension menu, click Debug to enable.

Right click, select the options menu, and set the IDE key to PHPSTORM.

Install the Xdebug client on the browser. Configure and enable it.

Example set up on Chrome

This section discusses how to use Xdebug in Chrome using the Xdebug Helper extension. For information about Xdebug tools for other browsers, consult the browser documentation.

To use Xdebug Helper with Chrome:

Create an SSH tunnel to the Cloud server.

Install the Xdebug Helper extension from the Chrome store.

Enable the extension in Chrome as shown in the following figure.

In Chrome, right-click in the Chrome toolbar.

From the pop-up menu, click Options.

From the IDE Key list, click PhpStorm.

Click Save.

Open your PhpStorm project.

In the top navigation bar, click (Start listening).

If the navigation bar isn’t displayed, click View > Navigation Bar.

In the PhpStorm navigation pane, double-click the PHP file to test.

Debug code locally

Due to the read-only environments, you need to pull code locally from an environment or specific Git branch to perform debugging.

The method you choose is up to you. You have the following options:

Check out code from Git and run composer install

This method works unless composer.json references packages in private repositories to which you do not have access. This method results in getting the entire Magento codebase.

Copy the vendor, app, pub, lib, and setup directories

This method results in your having all code you can possibly test. Depending on how many static assets you have, it could result in a long transfer with a large volume of files.

Copy the vendor directory only

Because most Magento and third-party code is in the vendor directory, this method is likely to result in good testing although you will not be testing the entire codebase.

To compress files and copy them to your local machine:

Use SSH to login to the remote environment.

Compress the files.

For example, to compress the vendor directory only, enter

On your local environment, use PhpStorm to compress the files.

After spending way more time that I should like to admit trying to get XDEBUG to work with Notepad++ using the DBGp plugin in anything but the simplest of projects, I decided to look for an alternative.

I compared features of 9 different IDE's for PHP development and reading tons of reviews. There are many great IDE's out there and everyone will have their favourite. My short list ended up including:

Eclipse (PDT) 3.5 -- Didn't bother trying it. While I'm sure it's a fine product, the website is ancient and not user friendly. Way too much work to try and find information. I'm not sure if it is even being developed anymore. PDT isn't even listed in their main list of downloads. Although I didn't try Eclipse (PDT), I did try Aptana which is built on Eclipse but I don't want to have to struggle to figure out the user interface… I want to code!

NetBeans 8 -- Way better user interface than Eclipse in my opinion. It did take me a little to figure out the XDEBUG integration but I got it to work exactly as I expected. My problem was part technology and part learning what I wanted to do in NetBeans which was to initiate the debugging session from within my web browser without having to modify the URL.

Although both of these are free, I would not have minded paying for an IDE for PHP development since I do a fair amount of this for a living. Some of the try before you buy contenders included PhpStorm 7.13 and PHPDesigner 8.1.2.

They all have similar sets of features. PHPDesigner is even a native Windows app which is why I might still consider it.

I decided to give NetBeans a try. There are tons of stories on the Net from developers switching from Eclipse to NetBeans but very few going in the other direction. It includes almost all of the features I regularly use and plugins to fill in the gap. What was it missing? Like many of the cross-platform IDE's, it's a Java application which means it is slower than it could be on Windows. I also wish it had Timed Backups feature like in Notepad++.

Assuming you've downloaded and installed NetBeans, here's how to get it to work with XDEBUG:

Step 1 -- Configure PHP to work with XDEBUG

Modify your php.ini file. Start by commenting out anything that refers to ZEND… by adding a semi-colon in front (to the left) of the line. Zend is not compatible with XDEBUG.

Next comment out everything under the (xdebug) section. Then add the following, making sure to modify the lines that refer to your XAMPP path (doesn't work with the Lite version).

For 64-bit WAMP, the lines would be:

As you can see, the actual filename and location for the php_xdebug.dll file may vary. Check the ext or zend_ext folder to get the correct name for your version of AMP. If your version of AMP did not come with XDEBUG, Follow the instructions found on the XDEBUG Installation Wizard page.

Save your changes and restart Apache.

Step 2- Configure NetBeans to work with XDEBUG

The following instructions are for NetBeans 8 but it should be fairly similar with other versions:

Open your project in NetBeans. If you don't have one yet, create one.

Click Tools > Options > PHP.

Click the Debugging tab and make sure that:

Debugger port: 9000.

Session ID: netbeans-xdebug

Maximum Data Length: 2048

None of the checkboxes are checked.

Click OK.

Click File > Project Properties > Run Configuration > Advanced button.

Select Do Not Open Web Browser and click OK twice.

Important: You will need to repeat these last two steps anytime you create a new project.

You may need to close and restart NetBeans for the changes to take effect as I discovered that they don't always take effect right away when you save.

That's it. It should now be configured and ready to use. Next we will XDEBUG enable your web browser.

Importing Existing Source Code into NetBeans

Creating a new NetBeans project from an existing source code folder/files is easy. The following instructions describe how to create a new NetBeans project using existing source code and without storing NetBeans' own project files in your original source code directory tree. Looks like a lot of steps but it only takes about a minute to complete.

Click File > New Project.

For the Category, select the PHP.

For the Projects, select PHP Application with Existing Sources.

Click Next.

Specify the path to the Source Folder. This is where you source code currently exists.

Specify a project name. This can be anything as long as it is different from other existing NetBeans projects you might have.

Specify the version of PHP you will be using. If you aren't sure, type the following at a Command prompt or verify your log files: php --version . If PHP isn't in your path, you may need to change to the directory where the PHP.exe application is located.

The default UTF-8 should be correct for most situations.

To prevent NetBeans from storing it's files in your source code directory, check the 'Put NetBeans metadata into a separate directory' box and specify the folder where these files should be stored.

Click Next.

For Run As:, select Local Web Site (running on local web server) if it is not already selected.

Project URL should be the path to the localhost that corresponds to source directory specified above. It typically looks like http://localhost/ and may include a folder name if your local website is not in the root of the server.

For PHP, the default index file is typically called index.php unless you've changed it.

DO NOT check the 'Copy files from Source Folder to another location' checkbox if you want to work on your files where they currently reside.

Click Finish.

NetBeans will then create the project and open the default file.

Enabling XDEBUG in Web Browsers

Chrome

There are two really useful plugins for Chrome that you should install:

XDEBUG Helper -- This will enable you to turn XDEBUG on and off from your web browser without having to modify the URL. This is essential when debugging a POST type form submission where you can't just enter a URL.

XDEBUG Output Toggler -- This extension allows you to toggle the visibility of the extremely useful stack trace messages for your PHP.

Firefox

There are also two really useful plugins for Firefox that you should install (to be tested):

easyXdebug or The easiest Xdebug -- This will enable you to turn XDEBUG on and off from your web browser without having to modify the URL. This is essential when debugging a POST type form submission where you can't just enter a URL. I don't recommend installing both of these add-ons as they might conflict with each other since they do the same function.

XDEBUG Error Togger -- This is the same as XDEBUG Output Togger for Chrome. It allows you to toggle the visibility of the extremely useful stack trace messages for your PHP.

Internet Explorer

Unfortunately I don't know of any integration tools that work with IE. If you know if any, leave a comment below. You'll always be able to debug by appending ?XDEBUG_SESSION_START=netbeans-xdebug to the URL

Using XDEBUG in NetBeans

Open your project.

Debugging a Project: Right-click on the project you want to debug. Then click on Debug to start the debugger. There is a Debug Project icon at the top of the editor that you could use however it will not work for debugging a specific file. Debugging a specific file in a project or standalone file: Right-click on the file either in the navigation pane or even in the source code itself and then click Debug. If the file is already open, right click anywhere in the editor window and select Debug.

Set a breakpoint by clicking on the line number in the margin. Otherwise your code will be executed from start to end and nothing will happen in debugger.

Switch to your web browser and load the page you want to debug.

Click the add-on/plugin icon to enable Xdebug in your browser.

Reload the page you want to debug and switch back to NetBeans. You should now notice that the execution of the code has paused where you set the breakpoint above.

You can now use the debugging controls in the toolbar to step over, step into, step out, run to cursor, run, and stop debugging.

Xdebug Chrome Extension

You may notice that your PHP code runs slower when debugging. This is perfectly normal and a good reason to have a fast computer. On the positive side, you'll really notice where the slower parts of your code are.

Troubleshooting

If you can't get it to work, check to make sure that your timezone is properly set in /xampp/php/php.ini. I'm not sure why but it made a difference for me. It will also make dates appear correctly on your website.

Chrome Xdebug Not Working

(Date) ; Defines the default timezone used by the date functions ; Find the value for your location by visiting http://php.net/date.timezone date.timezone = America/Toronto

Xdebug Chrome How To Use

Related Posts:

0 notes

Text

What is Docker CE ? | learn how to install Dockers

What is docker

Docker is a computer program which is used to provide a running environment to run all kinds of application which are in docker hub, or created in docker. It creates an image of your application and stores all requirements of files into the container. Whenever we want to run docker application in any system, we have to run a single file without providing any other requirements.

Docker is easy to use in Ubuntu. It also supports Window and Mac operating system. For windows, it runs in Windows10/enterprise only. To use in Windows7/8/8.1 or Windows10 home should use docker toolbox.

There are two kind of docker software for programmers.

Docker CE :- Free community edition :- This is an open source software.

Docker EE :- Docker Enterprise Edition :- This is a paid software design for enterprise development and IT teams who build, ship, and run business-critical applications in production.

Requirements :-

Operating system (ubuntu)

Docker

Steps to install docker . Steps to download docker in ubuntu. 1. Open terminal and follow these command to install docker.

Just type docker and check if docker is in your system or not. $ docker

2. To check the version of operating system. To install Docker CE, we need the 64-bit version of one of these Ubuntu versions: 1. Cosmic 18.10 2. Bionic 18.04 (LTS) 3. Xenial 16.04 (LTS) $ lsb_release -a

3. Update the apt package index. $ sudo apt-get update

4. If requires, then install. $ sudo apt-get install

5. If docker is not in your system then install it. $ sudo apt-get install docker.io

6. Now check the staus of docker. $ sudo systemctl status docker

Steps to add user in docker 1. Why sudo :- We have to use 'sudo' command to run docker commands because docker container run user 'root'. We have to join the docker group, when your system join the docker group after that one can run docker command without sudo.

2. 'USER' is your system name, commands to add user as listed below. $user will pick system user 1. $ sudo groupadd docker 2. $ sudo gpasswd -a $USER 3. $ newgrp docker

3. Second way to add user in docker group. 1. $ sudo groupadd docker 2. $ sudo usermod -aG docker $USER

4. After adding a 'USER' into the docker group, we have to shut down or restart so that we can run docker commands without 'sudo'.

5. Command to uninstall docker. $ sudo apt-get remove docker docker-engine docker.io containerd runc

Docker commands 1.To check Docker version $ docker --version

2. To check Docker and containers info $ docker info

3. Find out which users are in the docker group and who is allowed to start docker containers. 1. $ getent group sudo

2. $ getent group docker

4. 'pull' command fetch the 'name_of_images' image from the 'Docker registry' and saves it to our system. $ docker pull busybox (busybox is name of image)

5. You can use the 'docker images' command to see a list of all images on your system. $ docker images

6. To find the location of the images in the system we need to follow some commands:- $ docker info path of docker:- "Docker Root Dir: /var/lib/docker"

Commands to check the images:-

$ cd /var/lib/docker

$ ls

pardise@pardise-MS-7817:/var/lib/docker$ cd image

bash: cd: image: Permission denied

Permission denied for all users

$ sudo su

$ root@pardise-MS-7817:/var/lib/docker# ls

Now docker info command will provide all details about images and containers

$root@pardise-MS-7817:/var/lib/docker/image/overlay2# docker info

7. Now run a Docker container based on this image. When you call run, the Docker client finds the image (busybox in this case), loads up the container and then runs a command in that container. $ docker run busybox

8. Now Docker client ran the 'echo' command in our busybox container and then exited it. $docker run busybox echo "hello from busybox"

9. Command to shows you all containers that are currently running. $ docker ps

10. List of all containers that one can run. Do notice that the STATUS column shows that these containers exited a few minutes ago. $ docker ps -a CONTAINER ID – Unique ID given to all the containers. IMAGE – Base image from which the container has been started. COMMAND – Command which was used when the container was started CREATED – Time at which the container was created. STATUS – The current status of the container (Up or Exited). PORTS – Port numbers if any, forwarded to the docker host for communicating with the external world. NAMES – It is a container name, you can specify your own name.

11. To start Container $ docker start (container id)

12. To login in Container $ docker attach (container id)

13. To stop container $ docker stop (container id)

Difference between images and containers

Docker Image is a set of files which has no state, whereas Docker Container is the abstract of Docker Image. In other words, Docker Container is the run time instance of images.

Remove images and containers 1. Docker containers are not automatically removed, firstly stop them, then can use docker rm command. Just copy the container IDs. $ docker rm 419600f601f9 (container_id)

2. Command to deletes all containers that have a status of exited. -q flag, only returns the numeric IDs and -f filters output based on conditions provided. $ docker rm $(docker ps -a -q -f status=exited)

3. Command to delete all container. $ docker container prune

4. Command to delete all images. To remove all images which are not referenced by any existing container, not just dangling ones, use the -a flag: $ docker images prune -a

dangling image is an image that is not tagged and is not used by any container. To remove dangling images type:-

$ docker images prune

$ docker rmi image_id image_id......

5. Removing all Unused Objects. It will remove all stopped containers,all dangling images,and all unused network. To remove all images which are not referenced by any existing container, use the -a flag: $ docker system prune -a

You can follow us and our codes at our github repository: https://github.com/amit-kumar001/You can follow us and our codes at our github

#What is docker#learn how to install Dockers#Steps to install docker#Difference between images and containers

0 notes

Text

Scaling ProxySQL rapidly in Kubernetes

Editor’s Note: Because our bloggers have lots of useful tips, every now and then we update and bring forward a popular post from the past. Today’s post was originally published on November 26, 2019. It’s not uncommon these days for us to use a high availability stack for MySQL consisting of Orchestrator, Consul and ProxySQL. You can read more details about this stack by reading Matthias Crauwels’ blog post How to Autoscale ProxySQL in the Cloud as well as Ivan Groenwold’s post on MySQL High Availability With ProxySQL, Consul and Orchestrator. The high-level concept is simply that Orchestrator will monitor the state of the MySQL replication topology and report changes to Consul which in turn can update ProxySQL hosts using a tool called consul-template. Until now we’ve typically implemented the ProxySQL portion of this stack using an autoscaling group of sorts due to the high levels of CPU usage that can be associated with ProxySQL. It’s better to be able to scale up and down as traffic increases and decreases. This ensures you’re not paying for resources you don’t need. This, however, comes with a few disadvantages. The first is the amount of time it takes to scale up. If you’re using an autoscaling group and it launches a new instance it will need to take the following steps: There will be a request to your cloud service provider for a new VM instance. Once the instance is up and running as part of the group, it will need to install ProxySQL along with supporting packages such as consul (agent) and consul-template. Once the packages are installed, they’ll need to be configured to work with the consul server nodes as well as the ProxySQL nodes that are participating in the ProxySQL cluster. The new ProxySQL host will announce to Consul that it’s available, which in turn will update all the other participating nodes in the ProxySQL cluster. This can take time. Provisioning a new VM instance usually happens fairly quickly — normally within a couple of minutes — but sometimes there can be unexpected delays. You can speed up package installation by using a custom machine image, but since there’s an operational overhead with keeping images up to date with the latest versions of the installed packages, it may be easier to do this using a script that always installs the latest versions. All in all, you can expect a scale-up to take more than a minute. The next issue is how deterministic this solution is. If you’re not using a custom machine image, you’ll need to pull down your config and template files from somewhere — most likely a storage bucket — and there’s a chance those files could be overwritten. This means the next time the autoscaler launches an instance it may not necessarily have the same configuration as the rest of the hosts participating in the ProxySQL cluster. We can take this already impressive stack and go a step further using Docker containers and Kubernetes. For those unfamiliar with containerization; a container is similar to a virtual machine snapshot but isn’t a full snapshot that would include the OS. Instead, it contains just the binary that’s required to run your process. You create this image using a Dockerfile; typically starting from a specified Linux distribution, then using verbs like RUN, COPY and USER to specify what should be included in your container “image.” Once this image is constructed, it can be centrally located in a repository and made available for usage by machines using a containerization platform like Docker. This method of deployment has become more and more popular in recent years due to the fact that containers are lightweight, and you know that if the container works on one system it will work exactly the same way when it’s moved to a different system. This reduces common issues like dependencies and configuration variations from host to host. Given that we want to be able to scale up and down, it’s safe to say we’re going to want to run more than one container. That’s where Kubernetes comes into play. Kubernetes is a container management platform that operates on an array of hosts (virtual or physical) and distributes containers on them as specified by your configuration; typically a YAML-format Kubernetes deployment file. If you’re using Google Kubernetes Engine (GKE) on Google Cloud Platform (GCP), this is even easier as the vast majority of the work in creating a Kubernetes deployment (referred to as a ‘workload’ in GKE) YAML is handled for you via a simple UI within the GCP Console. If you want to learn more about Docker or Kubernetes, I highly recommend Nigel Poulton’s video content on Pluralsight. For now, let’s stick to learning about ProxySQL on this platform. If we want ProxySQL to run in Kubernetes and operate with our existing stack with Consul and Orchestrator, we’re going to need to keep best practices in mind for our containers. Each container should run only a single process. We know we’re working with ProxySQL, consul (agent), and consul-template, so these will all need to be in their own containers. The primary process running in each container should run as PID 1. The primary process running in each container should not run as root. Log output from the primary process in the container should be sent to STDOUT so that it can be collected by Docker logs. Containers should be as deterministic as possible — meaning they should run the same (or at least as much as possible) regardless of what environment they are deployed in. The first thing in the list above that popped out is the need to have ProxySQL, consul-template and consul (agent) isolated within their own containers. These are going to need to work together given that consul (agent) is acting as our communication conduit back to consul (server) hosts and consul-template is what updates ProxySQL based on changes to keys and values in Consul. So how can they work together if they’re in separate containers? Kubernetes provides the solution. When you’re thinking about Docker, the smallest computational unit is the container; however, when you’re thinking about Kubernetes, the smallest computational unit is the pod which can contain one or more containers. Any containers operating within the same pod can communicate with one another using localhost ports. So in this case, assuming you’re using default ports, the consul-template container can communicate to the consul (agent) container using localhost port 8500, and it can communicate to the ProxySQL container using port 6032 given that these three containers will be working together in the same pod. So let’s start looking at some code, starting with the simplest container and working our way to the most complex. Consul (Agent) Container Below is a generic version of the Dockerfile I’m using for consul (agent). The objective is to install Consul then instruct it to connect as an agent to the Consul cluster comprised of the consul (server) nodes. FROM centos:7 RUN yum install -q -y unzip wget && yum clean all RUN groupadd consul && useradd -r -g consul -d /var/lib/consul consul RUN mkdir /opt/consul && mkdir /etc/consul && mkdir /var/log/consul && mkdir /var/lib/consul && chown -R consul:consul /opt/consul && chown -R consul:consul /etc/consul && chown -R consul:consul /var/log/consul && chown -R consul:consul /var/lib/consul RUN wget -q -O /opt/consul/consul.zip https://releases.hashicorp.com/consul/1.6.1/consul_1.6.1_linux_amd64.zip && unzip /opt/consul/consul.zip -d /opt/consul/ && rm -f /opt/consul/consul.zip && ln -s /opt/consul/consul /usr/local/bin/consul COPY supportfiles/consul.conf.json /etc/consul/ USER consul ENTRYPOINT ["/usr/local/bin/consul", "agent", "--config-file=/etc/consul/consul.conf.json"] Simply put, the code above follows these instructions: Start from CentOS 7. This is a personal preference of mine. There are probably more lightweight distributions that can be considered, such as Alpine as recommended by Google, but I’m not the best OS nerd out there so I wanted to stick with what I know. Install our dependencies, which in this case are unzip and wget. Create our consul user, group and directory structure. Install consul. Copy over the consul config file from the host where the Docker build is being performed. Switch to the consul user. Start consul (agent). Now let’s check the code and see if it matches best practices. Container runs a single process: The ENTRYPOINT runs Consul directly, meaning nothing else is being run. Keep in mind that ENTRYPOINT specifies what should be run when the container starts. This means when the container starts it won’t have to install anything because the packages come with the image as designated by the Dockerfile, but we still need to launch Consul when the container starts. Process should be PID 1: Any process run by ENTRYPOINT will run as PID 1. Process should not be run as root: We switched to the Consul user prior to starting the ENTRYPOINT. Log output should go to STDOUT: If you run Consul using the command noted in the ENTRYPOINT, you’ll see log output goes to STDOUT. Should be as deterministic as possible: We’ve copied the configuration file into the container, meaning the container doesn’t have to get support files from anywhere else before Consul starts. The only way the nature of Consul will change is if we recreate the container image with a new configuration file. There’s really nothing special about the Consul configuration file that gets copied into the container. You can see an example of this by checking the aforementioned blog posts by Matthias or Ivan for this particular HA stack. ProxySQL Container Below is a generic version of the Dockerfile I’m using for ProxySQL. The objective is to install ProxySQL and make it available to receive traffic requests on 6033 for write traffic, 6034 for read traffic and 6032 for the admin console which is how consul-template will interface with ProxySQL. FROM centos:7 RUN groupadd proxysql && useradd -r -g proxysql proxysql RUN yum install -q -y https://github.com/sysown/proxysql/releases/download/v2.0.6/proxysql-2.0.6-1-centos67.x86_64.rpm mysql curl && yum clean all COPY supportfiles/* /opt/supportfiles/ COPY startstop/* /opt/ RUN chmod +x /opt/entrypoint.sh RUN chown proxysql:proxysql /etc/proxysql.cnf USER proxysql ENTRYPOINT ["/opt/entrypoint.sh"] Simply put, the code above follows these instructions: Start from CentOS 7. Create our ProxySQL user and group. Install ProxySQL and dependencies, which in this case is curl, which will be used to poll the GCP API in order to determine what region the ProxySQL cluster is in. We’ll cover this in more detail below. Move our configuration files and ENTRYPOINT script to the container. Make sure the ProxySQL config file is readable by ProxySQL. Switch to the ProxySQL user. Start ProxySQL via the ENTRYPOINT script provided with the container. In my use case, I have multiple ProxySQL clusters — one per GCP region. They have to be logically grouped together to ensure they route read traffic to replicas within the local region but send traffic to the master regardless of what region it’s in. In my solution, a hostgroup is noted for read replicas in each region, so my mysql_query_rules table needs to be configured accordingly. In my solution, the MySQL hosts will be added to different host groups, but the routing to each hostgroup will remain consistent. Given that it’s highly unlikely to change, I have mysql_query_rules configured in the configuration file. This means I need to select the correct configuration file based on my region before starting ProxySQL, and this is where my ENTRYPOINT script comes into play. Let’s have a look at a simplified and more generic version of my code: #!/bin/bash dataCenter=$(curl https://metadata.google.internal/computeMetadata/v1/instance/zone -H "Metadata-Flavor: Google" | awk -F "/" '{print $NF}' | cut -d- -f1,2) ... case $dataCenter in us-central1) cp -f /opt/supportfiles/proxysql-us-central1.cnf /etc/proxysql.cnf ;; us-east1) cp -f /opt/supportfiles/proxysql-us-east1.cnf /etc/proxysql.cnf ;; esac ... exec proxysql -c /etc/proxysql.cnf -f -D /var/lib/proxysql The script starts by polling the GCP API to determine what region the container has been launched in. Based on the result, it will copy the correct config file to the appropriate location, then start ProxySQL. Let’s see how the combination of the Dockerfile and the ENTRYPOINT script allows us to meet best practices. Container runs a single process: ENTRYPOINT calls the entrypoint.sh script, which does some conditional logic based on the regional location of the container, then ends by running ProxySQL. This means at the end of the process ProxySQL will be the only process running. Process should be PID 1: The command “exec” at the end of the ENTRYPOINT script will start ProxySQL as PID 1. Process should not be run as root: We switched to the ProxySQL user prior to starting the ENTRYPOINT. Log output should go to STDOUT: If you run ProxySQL using the command noted at the end of the ENTRYPOINT script you’ll see that log output goes to STDOUT. Should be as deterministic as possible: We’ve copied the potential configuration files into the container. Unlike Consul, there are multiple configuration files and we need to determine which will be used based on the region the container lives in, but the configuration files themselves will not change unless the container image itself is updated. This ensures that all containers running within the same region will behave the same. Consul-template container Below is a generic version of the Dockerfile I’m using for consul-template. The objective is to install consul-template and have it act as the bridge between Consul via the consul (agent) container and ProxySQL; updating ProxySQL as needed when keys and values change in Consul. FROM centos:7 RUN yum install -q -y unzip wget mysql nmap-ncat curl && yum clean all RUN groupadd consul && useradd -r -g consul -d /var/lib/consul consul RUN mkdir /opt/consul-template && mkdir /etc/consul-template && mkdir /etc/consul-template/templates && mkdir /etc/consul-template/config && mkdir /opt/supportfiles && mkdir /var/log/consul/ && chown -R consul:consul /etc/consul-template && chown -R consul:consul /etc/consul-template/templates && chown -R consul:consul /etc/consul-template/config && chown -R consul:consul /var/log/consul RUN wget -q -O /opt/consul-template/consul-template.zip https://releases.hashicorp.com/consul-template/0.22.0/consul-template_0.22.0_linux_amd64.zip && unzip /opt/consul-template/consul-template.zip -d /opt/consul-template/ && rm -f /opt/consul-template/consul-template.zip && ln -s /opt/consul-template/consul-template /usr/local/bin/consul-template RUN chown -R consul:consul /opt/consul-template COPY supportfiles/* /opt/supportfiles/ COPY startstop/* /opt/ RUN chmod +x /opt/entrypoint.sh USER consul ENTRYPOINT ["/opt/entrypoint.sh"] Simply put, the code above follows these instructions: Start from CentOS 7. Install our dependencies which are unzip, wget, mysql (client), nmap-ncat and curl. Create our Consul user and group. Create the consul-template directory structure. Download and install consul-template. Copy the configuration file, template files and ENTRYPOINT script to the container. Make the ENTRYPOINT script executable. Switch to the Consul user. Start consul-template via the ENTRYPOINT script that’s provided with the container. Much like our ProxySQL container, we really need to look at the ENTRYPOINT here in order to get the whole story. Remember, this is multi-region so there is additional logic that has to be considered when working with template files. #!/bin/bash dataCenter=$(curl https://metadata.google.internal/computeMetadata/v1/instance/zone -H "Metadata-Flavor: Google" | awk -F "/" '{print $NF}' | cut -d- -f1,2) ... cp /opt/supportfiles/consul-template-config /etc/consul-template/config/consul-template.conf.json case $dataCenter in us-central1) cp /opt/supportfiles/template-mysql-servers-us-central1 /etc/consul-template/templates/mysql_servers.tpl ;; us-east1) cp /opt/supportfiles/template-mysql-servers-us-east1 /etc/consul-template/templates/mysql_servers.tpl ;; esac cp /opt/supportfiles/template-mysql-users /etc/consul-template/templates/mysql_users.tpl ### Ensure that proxysql has started while ! nc -z localhost 6032; do sleep 1; done ### Ensure that consul agent has started while ! nc -z localhost 8500; do sleep 1; done exec /usr/local/bin/consul-template --config=/etc/consul-template/config/consul-template.conf.json This code is very similar to the ENTRYPOINT file used for ProxySQL in the sense that it checks for the region the container is in, then moves configuration and template files into the appropriate location. However, there is some additional logic here that checks to ensure that ProxySQL is up and listening on 6032 and that consul (agent) is up and listening on port 8500. The reason for this is the consul-template needs to be able to communicate with both these hosts. You really have no assurance as to what container is going to load in what order in a pod, so to avoid excessive errors in the consul-template log, I have it wait until it knows that its dependent services are running. Let’s go through our best practices checklist one more time against our consul-template container code. Container runs a single process: ENTRYPOINT calls the entrypoint.sh script, which does some conditional logic based on the regional location of the container, then ends by running consul-template. This means at the end of the process consul-template will be the only process running. Process should be PID 1: The command “exec” at the end of the ENTRYPOINT script will start consul-template as PID 1. Process should not be run as root: We switched to the consul user prior to starting the ENTRYPOINT. Log output should go to STDOUT: If you run Consul using the command noted at the end of the ENTRYPOINT script, you’ll see log output goes to STDOUT. Should be as deterministic as possible: Just like ProxySQL and consul (agent), all the supporting files are packaged with the container. Yes, there is logic to determine what files should be used, but you have the assurance that the files won’t change unless you create a new version of the container image. Putting it all together Okay, we have three containers representing the three processes we need to package together so ProxySQL can work as part of our HA stack. Now we need to put it all together in a pod so Kubernetes can have it run against our resources. In my use case, I’m running this on GCP, meaning once my containers have been built they’re going to need to be pushed up to the Google Container Registry. After this we can create our workload to run our pod and specify how many pods we want to run. Getting this up and running can be done with just a few short and simple steps: Create a Kubernetes cluster if you don’t already have one. This is what provisions the Cloud Compute VMs the pods will run on. Push your three Docker images to the Google container registry. This makes the images available for use by the Kubernetes engine. Create your Kubernetes workload, which can be done simply via the user interface in the GCP console. All that’s required is selecting the latest version of the three containers you’ve pushed up to the registry, optionally applying some metadata like an application name, Kubernetes namespace, and labels, then selecting which cluster you want to run the workload on. Once you click deploy, the containers will spin up and, assuming there are no issues bringing the containers online, you’ll quickly have a functioning ProxySQL pod in Kubernetes that follows these high-level steps: The pod is started. The three containers will start. In Kubernetes, pods are fully atomic. All the containers start without error or the pod will not consider itself started. The consul-template container will poll consul (agent) and ProxySQL on their respective ports until it’s confirmed those processes have started, then consul-template will start. Consul-template will create the new SQL files meant to configure ProxySQL based on the contents of the Consul key / value store. Consul-template will run the newly created SQL files against ProxySQL via its admin interface. The pod is now ready to receive traffic. The YAML During the process of creating your workload, or even after the fact, you’ll be able to see the YAML you’d normally have to create with standard Kubernetes deployments. Let’s have a look at the YAML that was created for my particular deployment. apiVersion: apps/v1 kind: Deployment metadata: annotations: deployment.kubernetes.io/revision: "1" creationTimestamp: "2019-10-16T15:41:37Z" generation: 64 labels: app: pythian-proxysql env: sandbox name: pythian-proxysql namespace: pythian-proxysql resourceVersion: "7516809" selfLink: /apis/apps/v1/namespaces/pythian-proxysql/deployments/pythian-proxysql uid: 706c6284-f02b-11e9-8f3e-42010a800050 spec: minReadySeconds: 10 progressDeadlineSeconds: 600 replicas: 2 revisionHistoryLimit: 10 selector: matchLabels: app: pythian-proxysql env: sandbox strategy: rollingUpdate: maxSurge: 100% maxUnavailable: 25% type: RollingUpdate template: metadata: creationTimestamp: null labels: app: pythian-proxysql env: sandbox spec: containers: - image: gcr.io/pythian-proxysql/pythian-proxysql-proxysql@sha256:3ba95101eb7a5aac58523e4c6489956869865452d1cbdbd32b4186a44f2a4500 imagePullPolicy: IfNotPresent name: pythian-proxysql-proxysql-sha256 resources: {} terminationMessagePath: /dev/termination-log terminationMessagePolicy: File - image: gcr.io/pythian-proxysql/pythian-proxysql-consul-agent@sha256:7c66fa5e630c4a0d70d662ec8e9d988c05bd471b43323a47e240294fc00a153d imagePullPolicy: IfNotPresent name: pythian-proxysql-consul-agent-sha256 resources: {} terminationMessagePath: /dev/termination-log terminationMessagePolicy: File - image: gcr.io/pythian-proxysql/pythian-proxysql-consul-template@sha256:1e70f4b96614dfd865641bf75784d895a794775a6c51ce6b368387591f3f1918 imagePullPolicy: IfNotPresent name: pythian-proxysql-consul-template-sha256 resources: {} terminationMessagePath: /dev/termination-log terminationMessagePolicy: File dnsPolicy: ClusterFirst restartPolicy: Always schedulerName: default-scheduler securityContext: {} terminationGracePeriodSeconds: 30 status: availableReplicas: 2 collisionCount: 1 conditions: - lastTransitionTime: "2019-10-16T15:41:37Z" lastUpdateTime: "2019-11-11T15:56:55Z" message: ReplicaSet "pythian-proxysql-8589fdbf54" has successfully progressed. reason: NewReplicaSetAvailable status: "True" type: Progressing - lastTransitionTime: "2019-11-11T20:41:31Z" lastUpdateTime: "2019-11-11T20:41:31Z" message: Deployment has minimum availability. reason: MinimumReplicasAvailable status: "True" type: Available observedGeneration: 64 readyReplicas: 2 replicas: 2 updatedReplicas: 2 The first thing I have to point out is this is a LOT of YAML that we didn’t have to create given the Google Kubernetes Engine handled all of it. This is a huge part of easing the process which allows us to get our solution working so quickly. However, despite the fact that we have a lot of YAML created for us, there are still some occasions where we may need to modify this manually, such as working with Kubernetes Container Lifecycle Hooks, or working with requests or limits for hardware resources for individual containers in our pod. How do I access my ProxySQL instance? One consideration for Kubernetes is when pods are started and stopped they’ll get an ephemeral IP address, so you don’t want to have your applications connect to your pods directly. Kubernetes has a feature called a “service” that allows your pods to be exposed via a consistent network interface. This service can also handle load balancing, which is what I’m planning on using with my Kubernetes deployment. Adding a service to your GKE workload is very simple and can be added with a few clicks. Autoscaling As noted earlier in this post, before the implementation of Kubernetes for this solution, it was recommended to use cloud compute autoscaling groups to handle fluctuations in traffic. We want to include the same strategy with Kubernetes to ensure we have enough pods available to handle traffic demand. Including autoscaling in your workload is also fairly simple and can be done via the console UI. One important thing to note about scaling with Kubernetes is the time it takes to scale up and down. In the intro section of this post, I noted the process of adding and removing nodes from an autoscaling group and how that can take minutes to achieve depending on how quickly your cloud provider can stand up a new instance and the complexity of your configuration. With Kubernetes, I’ve seen my pods scale up in as little as three seconds and scale down in less than one second. This is part of what makes this solution so powerful. Considerations for Connections During Scale-Up and Down One important thing to note is, as the workload gains and loses pods, your connections to ProxySQL via the exposed service can be interrupted. The autoscaling documentation notes that this can cause disruption and your application needs to be able to handle this in much the same way it would have to for a cloud compute autoscaling group. You’ll want to ensure that your application has retry on database failure logic built in before incorporating Kubernetes autoscaling (or any autoscaling for that matter) as part of your data platform. Considerations for MySQL users in ProxySQL Three tables are replicated when working with ProxySQL cluster: mysql_servers, mysql_query_rules and mysql_users — meaning when a change to any of these tables is made on one of the nodes in the cluster, it will be replicated to all the other nodes. We really don’t need to worry about this when working with mysql_servers given that all nodes will get their mysql_server information from Consul via consul-template, so I’ve disabled this clustering feature. With my particular use case I don’t need to worry about mysql_query_rules either because, as noted earlier in this post, my traffic is being routed based on the port that traffic is being sent to. The rules for this are simple, and should not change, so I have it in the configuration file and I have disabled replicating this table, as well. The last table to consider is mysql_users and this is where things get interesting. Remember with Kubernetes it’s possible to have persistent storage, but we really want our containers to be as stateless as possible, so if we were to follow the Docker and Kubernetes philosophy as closely as possible we wouldn’t want to have our data persist. This falls into the whole cattle vs pets discussion when working with containers, but I digress. Let’s assume we’ve opted NOT to persist our ProxySQL data, typically stored in SQLite, and we lose all the pods in our Kubernetes cluster. It’s unlikely, but we always need to be ready for disaster. When the first pod comes up, it’s starting with a blank slate and this isn’t a problem considering it will get its initial set of mysql_server data from Consul via consul-template and its mysql_query_rules data from the config file. However, there is no source of truth for mysql_users data, so all that data would be lost. In this case, we need to incorporate some source of truth for the ProxySQL mysql_users table. It’s possible to use a cloud compute VM with ProxySQL installed to be an ever-present member of the cluster which could seed data for new joining pods. However, that breaks our construct of working specifically with containers. Plus, if you have a multi-cluster configuration like I do, where there’s one cluster in each region, you need one ProxySQL “master host” in each region. This is a bit of a waste considering it’s just acting as a source of truth for mysql_users, which likely will be the same across all clusters. My solution, in this case, is to leverage the source of truth we already have in place: Consul. If it’s already acting as a source of truth for mysql_servers, there’s no reason it can’t act as a source of truth for this as well. All I need is to have my MySQL users and password hashes (always stay secure) in Consul. I can then use consul-template to create these on new ProxySQL host, or change them as keys and values change. You may have noticed this in the ENTRYPOINT script in my consul-template container. To Cluster or Not To Cluster? I mentioned before that ProxySQL cluster handles the replication of three tables: mysql_users, mysql_query_rules and mysql_servers. Considering all three of these tables now have their own source of truth, we really don’t need to worry about replicating this data. As Consul receives change reports, it will update all the ProxySQL pods considering that all of them have consul (agent) and consul-template containers as part of the pod. With this in mind, I’ve opted to rely on my constructed sources of truth and reduce solution complexity by removing ProxySQL clustering; however, this is going to vary from use case to use case. Conclusion The solution implemented in this use case has required the inclusion of a lot of new technologies that MySQL DBAs may or may not have familiarity with: ProxySQL, Orchestrator, Consul, GTIDs, etc. We’ve made this solution a little more complex by adding Docker and Kubernetes to the stack, but I personally believe this complexity is worth it considering the higher degree of idempotency that is built into the solution, the lack of need for ProxySQL clustering and the speed in which scale-up and scale-down occurs. One last consideration is the simple need for learning how to incorporate containers into your stack. This is not my first blog post on container philosophy and implementation. I believe containers are going to become a greater part of the landscape for all of us — even us, the database professionals with our highly stateful technological challenges. If you haven’t already started educating yourself on these technologies, I would highly encourage you to do so to better prepare yourself for the shift from “Database Administrator” to “Database Reliability Engineer.” https://blog.pythian.com/proxysql-in-kubernetes/

0 notes

Text

Introducing rosetta-bitcoin: Coinbase’s Bitcoin implementation of the Rosetta API

By Patrick O’Grady

In June, we launched Rosetta as an open-source specification that makes integrating with blockchains simpler, faster, and more reliable. There are now 20+ blockchain projects working on a Rosetta implementation (Near, Cardano, Celo, Coda, Neo, Tron, Handshake, Oasis, Cosmos, Decred, Filecoin, Ontology, Sia, Zilliqa, Digibyte, Harmony, Kadena, Nervos, and Blockstack), five in-progress SDKs (Golang, JavaScript, TypeScript, Java, and Rust), and eight teams have made contributions to at least one of the Rosetta repositories on GitHub (rosetta-specifications, rosetta-sdk-go, and rosetta-cli).

Today, we are sharing a key contribution to this growing collection of implementations: rosetta-bitcoin.

Why Bitcoin?

Bitcoin is the bellwether for all of crypto, is the most popular blockchain, has the largest market capitalization, and most blockchain developers know how it works (so it is easier to understand how Rosetta can be implemented for other blockchains).

On another note, the reference implementation for Bitcoin (known as Bitcoin Core) doesn’t provide native support for many of the features integrators want. It is not possible to query account balances and/or UTXOs for all accounts, serve preprocessed blocks to callers so they don’t need to fetch all inputs to parse a transaction, nor to construct transactions without importing private keys onto the node (which isn’t practical for users that never bring private keys online). Often, these missing features drive integrators to run some sort of additional “indexing” software and implement their own libraries to handle transaction construction.

rosetta-bitcoin provides access to all these features, requires no configuration by default, and can be started with a single command. Furthermore, rosetta-bitcoin enables these features exclusively through RPC interaction with Bitcoin Core so we don’t need to maintain a fork of Bitcoin Core to enable this new functionality and easy configuration!

Rosetta API Refresher

rosetta-bitcoin implements both of the Rosetta API core components: the Data API and the Construction API. Together, these components provide universal read and write access to Bitcoin. We’ve included several diagrams below that outline the specific endpoints that any Rosetta API implementation supports. If you are interested in building on top of an implementation, we recommend using rosetta-sdk-go (which abstracts away these flows behind Golang functions).

The Data API consists of all the endpoints used to “get information” about a blockchain. We can get the networks supported by an implementation (which may be > 1 if a blockchain supports sharding or if it is a gateway to multiple networks), the supported operation types on each network, and the status of each network.

The Data API also allows for getting the contents of any block, getting a particular transaction in a block, and fetching the balance of any account present in a block. Rosetta validation tooling ensures that the balance computed for any account from operations in blocks is equal to the balance returned by the node (often called “reconciliation”).

Lastly, the Data API allows for fetching all mempool transactions and for fetching any particular mempool transaction. This is useful for integrators that want to monitor the status of their broadcasts and to inspect any incoming deposits before they are confirmed on-chain.

While the Data API provides the ability to read data from a blockchain in a standard format, the Construction API enables developers to write to a blockchain (i.e. construct transactions) in a standard format. To meet strict security standards, implementations are expected to be stateless, operate entirely offline, and support detached key generation and signing. We can derive an address from a public key (on blockchains that don’t require on-chain origination).